About Me

I have been a PhD candidate at the ZJU Robotics Lab (ZJURL), Zhejiang University, since 2015. My research focuses on visual localization, and I am broadly interested in all areas of robotics.

Since Sept. 2019 I have been a visitor at the Autonomous Systems Lab (ASL), ETH Zurich. Previously, I visited the UTS Centre for Autonomous Systems (CAS) from Oct. 2017 to Jan. 2018.

Publications

Journals:

- Huifang Ma, Yue Wang, Rong Xiong, Sarath Kodagoda, and Li Tang.
  DeepGoal: learning to drive with driving intention from human control demonstration.
  Robotics and Autonomous Systems, article 103477, 2020.

  @article{ma2020deepgoal,
    author = "Ma, Huifang and Wang, Yue and Xiong, Rong and Kodagoda, Sarath and Tang, Li",
    title = "DeepGoal: Learning to drive with driving intention from human control demonstration",
    journal = "Robotics and Autonomous Systems",
    pages = "103477",
    year = "2020",
    publisher = "Elsevier"
  }

- Bo Fu, Yue Wang, Xiaqing Ding, Yanmei Jiao, Li Tang, and Rong Xiong.
  LiDAR–camera calibration under arbitrary configurations: observability and methods.
  IEEE Transactions on Instrumentation and Measurement, 69(6):3089–3102, 2019.

  @article{fu2019lidar,
    author = "Fu, Bo and Wang, Yue and Ding, Xiaqing and Jiao, Yanmei and Tang, Li and Xiong, Rong",
    title = "LiDAR--Camera Calibration under Arbitrary Configurations: Observability and Methods",
    journal = "IEEE Transactions on Instrumentation and Measurement",
    volume = "69",
    number = "6",
    pages = "3089--3102",
    year = "2019",
    publisher = "IEEE"
  }

- Li Tang, Yue Wang, Xiaqing Ding, Huan Yin, Rong Xiong, and Shoudong Huang.
  Topological local-metric framework for mobile robots navigation: a long term perspective.
  Autonomous Robots, 43(1):197–211, 2019.

  @article{tang2019topological,
    author = "Tang, Li and Wang, Yue and Ding, Xiaqing and Yin, Huan and Xiong, Rong and Huang, Shoudong",
    title = "Topological local-metric framework for mobile robots navigation: a long term perspective",
    journal = "Autonomous Robots",
    volume = "43",
    number = "1",
    pages = "197--211",
    year = "2019",
    publisher = "Springer"
  }

Conferences:

- Li Tang, Yue Wang, Qianhui Luo, Xiaqing Ding, and Rong Xiong.
  Adversarial feature disentanglement for place recognition across changing appearance.
  In 2020 International Conference on Robotics and Automation (ICRA). IEEE, 2020. Accepted.
  Code: https://github.com/dawnos/fdn-pr

  @inproceedings{tang2020adversarial,
    author = "Tang, Li and Wang, Yue and Luo, Qianhui and Ding, Xiaqing and Xiong, Rong",
    title = "Adversarial Feature Disentanglement for Place Recognition Across Changing Appearance",
    booktitle = "2020 International Conference on Robotics and Automation (ICRA)",
    note = "Accepted",
    year = "2020",
    organization = "IEEE",
    code = "https://github.com/dawnos/fdn-pr"
  }

- Xiaqing Ding, Yue Wang, Dongxuan Li, Li Tang, Huan Yin, and Rong Xiong.
  Laser map aided visual inertial localization in changing environment.
  In 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 4794–4801. IEEE, 2018.

  @inproceedings{ding2018laser,
    author = "Ding, Xiaqing and Wang, Yue and Li, Dongxuan and Tang, Li and Yin, Huan and Xiong, Rong",
    title = "Laser map aided visual inertial localization in changing environment",
    booktitle = "2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)",
    pages = "4794--4801",
    year = "2018",
    organization = "IEEE"
  }

- Li Tang, Xiaqing Ding, Huan Yin, Yue Wang, and Rong Xiong.
  From one to many: unsupervised traversable area segmentation in off-road environment.
  In 2017 IEEE International Conference on Robotics and Biomimetics (ROBIO), 787–792. IEEE, 2017.

  @inproceedings{tang2017one,
    author = "Tang, Li and Ding, Xiaqing and Yin, Huan and Wang, Yue and Xiong, Rong",
    title = "From one to many: Unsupervised traversable area segmentation in off-road environment",
    booktitle = "2017 IEEE International Conference on Robotics and Biomimetics (ROBIO)",
    pages = "787--792",
    year = "2017",
    organization = "IEEE"
  }

Projects

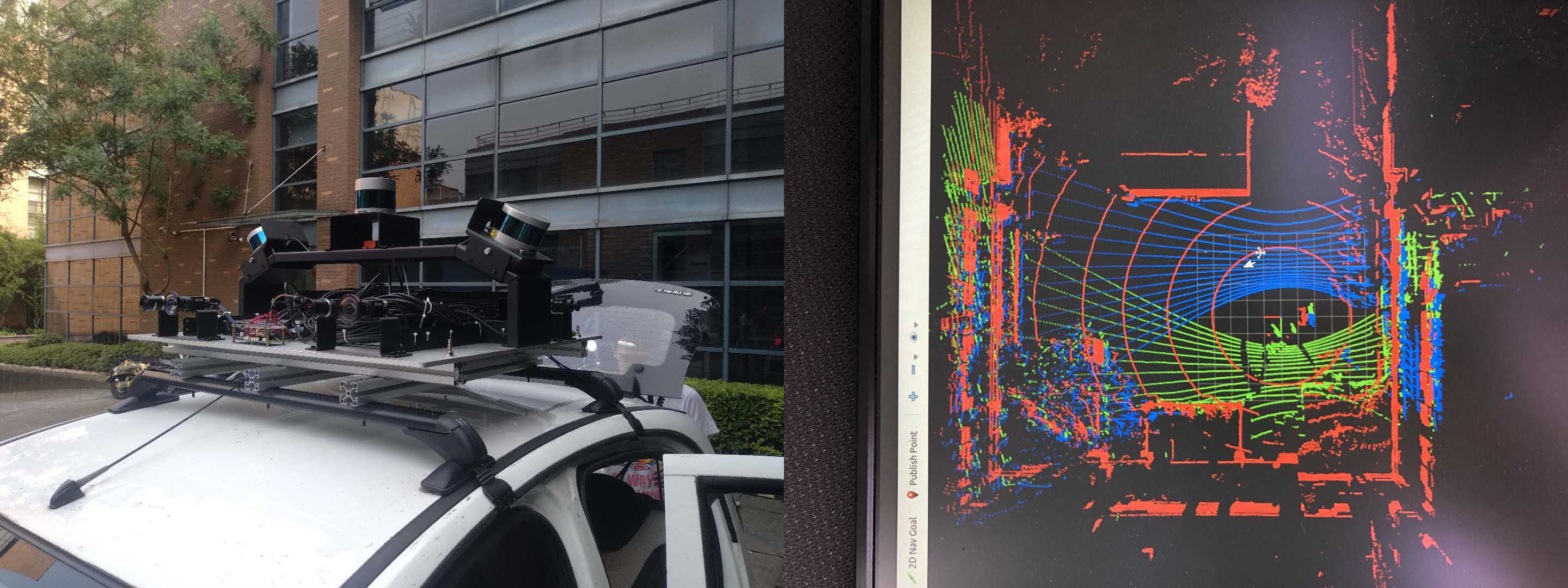

Autonomous Driving Platform

To collect data for research on long-term autonomous driving, we built a car-mounted sensor platform with 3D LiDARs, cameras, an IMU, and an INS. It currently carries one 32-line 3D LiDAR on top, two 16-line 3D LiDARs on the sides, two forward-facing stereo cameras, three fisheye cameras facing left, right, and rear, and an IMU. An INS provides ground truth.

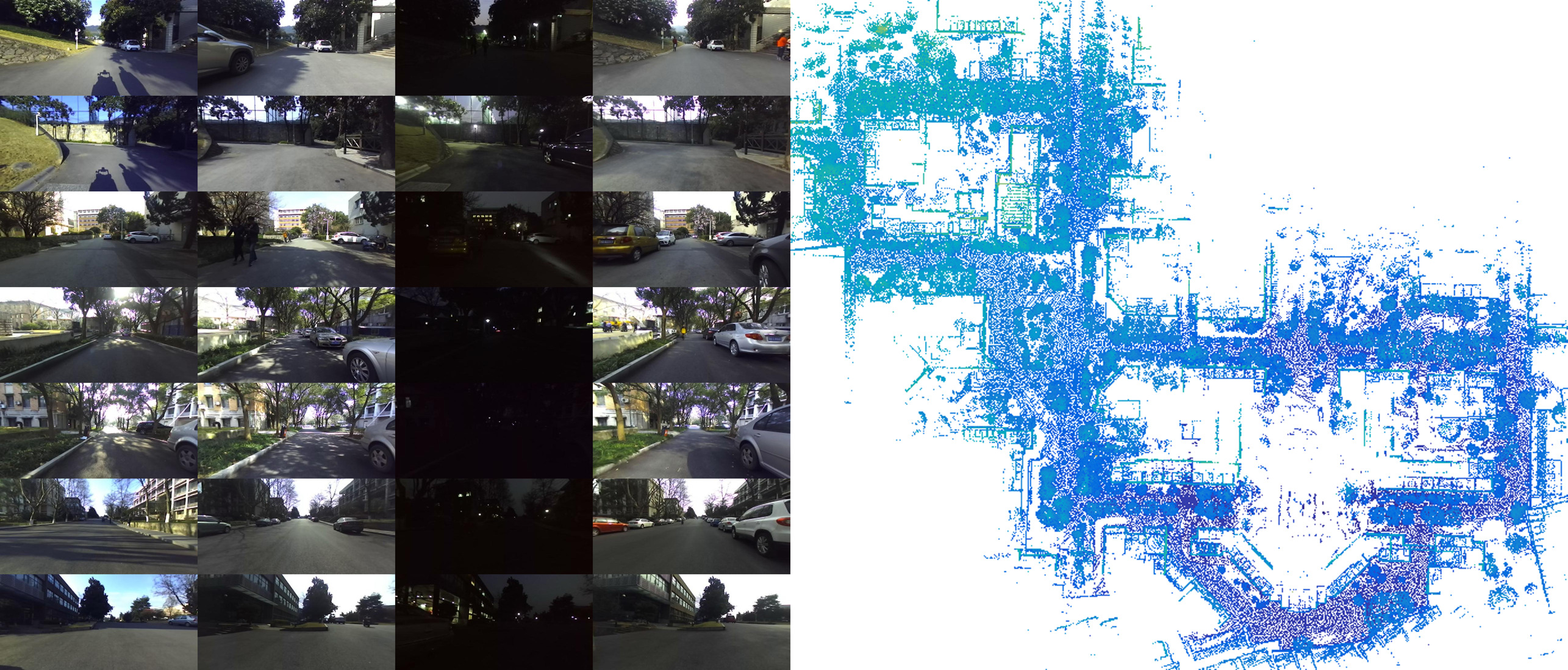

YQ21: Dataset for Long-term Localization

To investigate the persistent autonomy of mobile robots, I collected a multi-sensor dataset (3D LiDAR, IMU, a short-range stereo camera, and a long-range stereo camera) by driving the same route at different times over 3 days. The dataset captures the same places under varying conditions and can support a range of research topics (e.g., SLAM, place recognition, and semantic segmentation).

Delivery Mobile Robot

Collaborated with JD.com to develop a last-mile delivery robot aimed at long-term autonomous navigation in off-road environments (e.g., campuses and residential communities). The robot is built on an Ackermann-steering platform and has an interface for users to pick up parcels. A 3D LiDAR, an IMU, and a stereo camera are used for mapping and localization, and a 2D LiDAR mounted at the bottom handles obstacle avoidance. The key challenge is long-term localization.

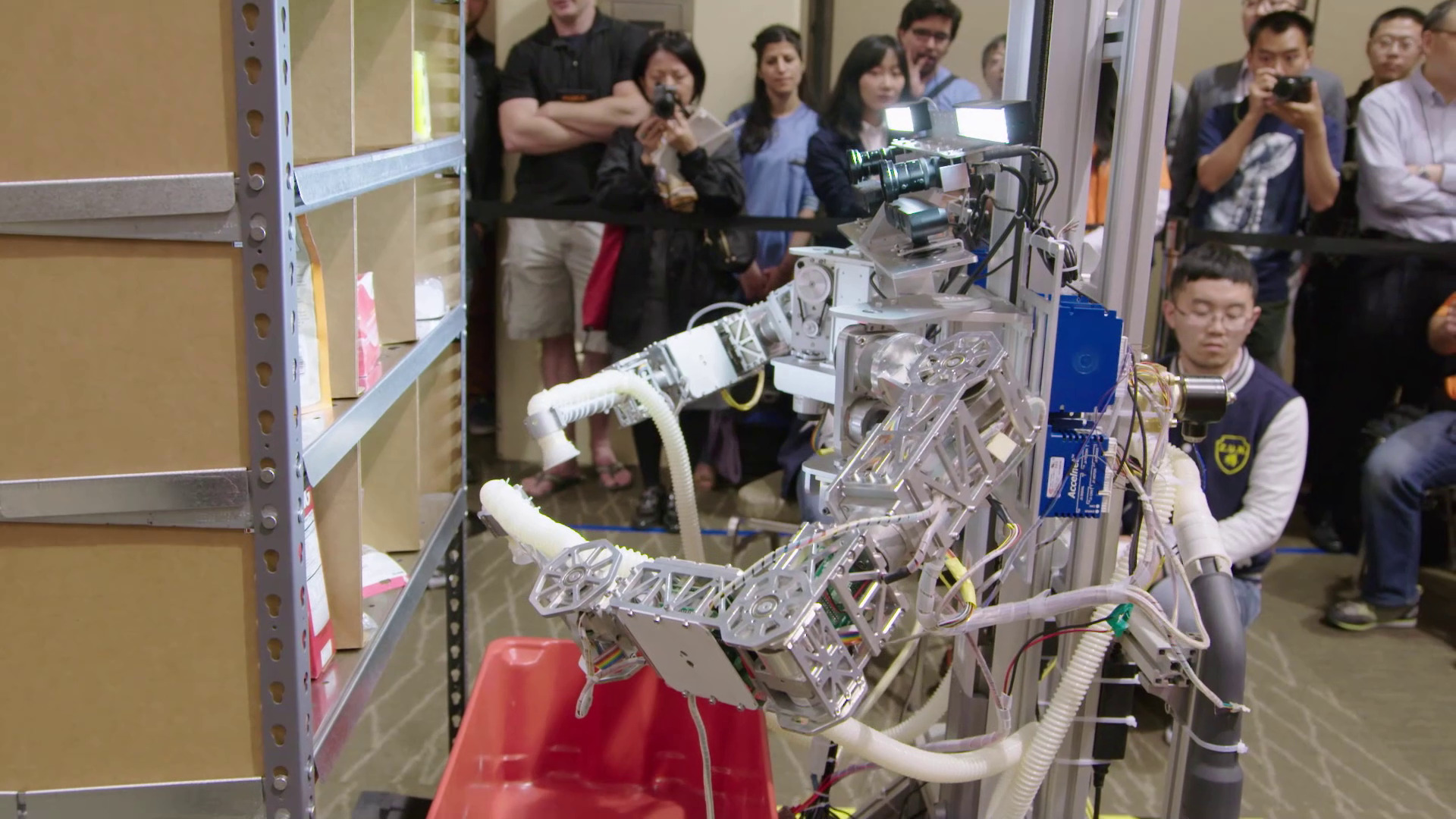

Amazon Picking Challenge

Cooperated with UTS (University of Technology Sydney) to participate in the Amazon Picking Challenge (APC) at ICRA 2015. The APC targets robots for packing and sorting in logistics: participants must design a robot that completes a shelf-picking task according to a given shopping list. Our team designed a two-arm robot that can grasp multiple objects simultaneously, which improves efficiency by reducing movement and enlarging the workspace. RGB and depth cameras mounted on the head provide perception; the main perception challenges are the camera perspective and the limited field of view.