YQ21: Dataset for Long-term Localization

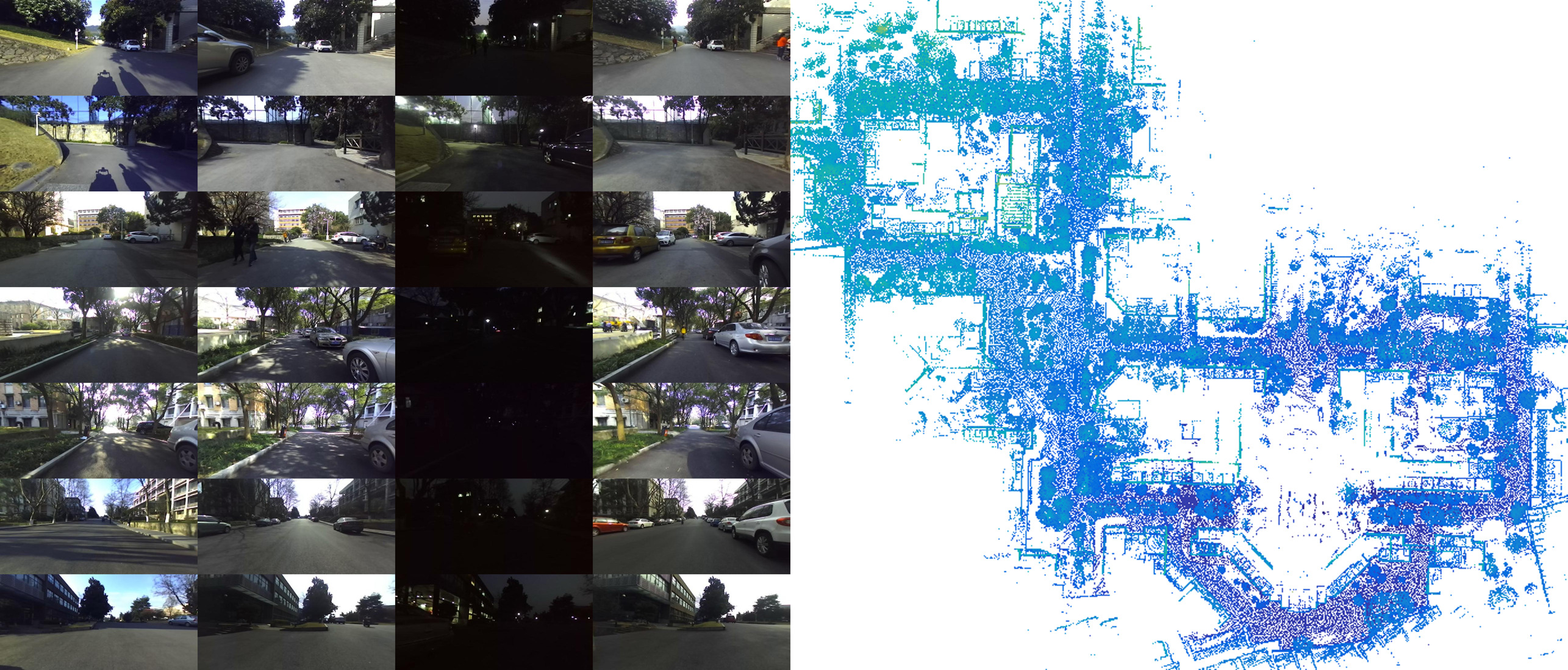

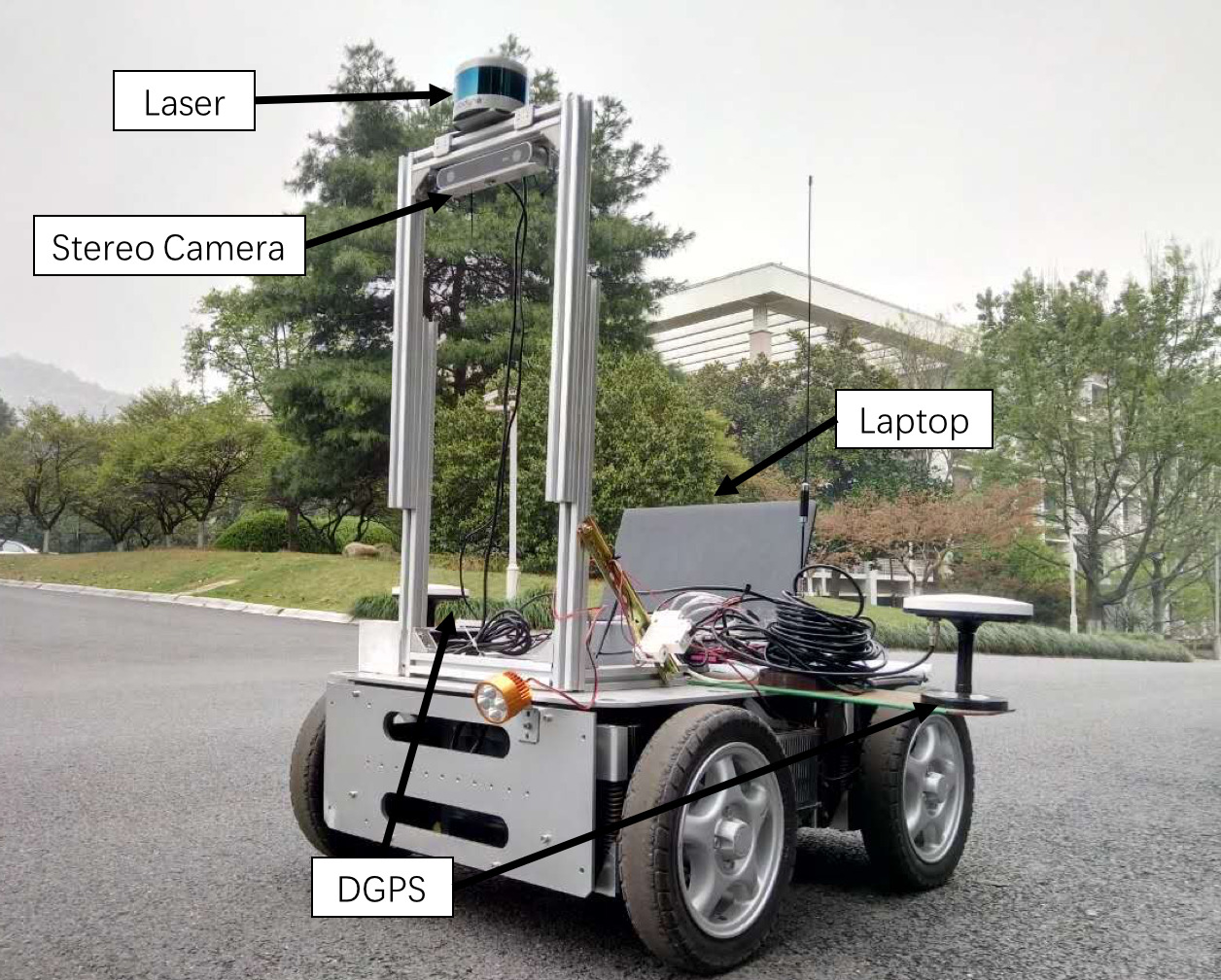

To investigate the persistent autonomy of mobile robots, I collected a dataset with multiple sensors (3D LiDAR, IMU, a short-range stereo camera and a long-range stereo camera) on the same route at different times over 3 days. The dataset captures different variations of the same places and can be used for a range of research topics (e.g. SLAM, place recognition, semantic segmentation).

Data

| Session | OneDrive | Baidu Disk (link, access code) |
|---|---|---|
| 2017/03/03 07:52:31 | link | link, n7g9 |
| 2017/03/03 09:20:13 | link | link, y0wy |
| 2017/03/03 10:23:11 | link | link, ec5t |
| 2017/03/03 11:48:03 | link | link, snoi |
| 2017/03/03 12:59:16 | link | link, qn85 |
| 2017/03/03 14:34:43 | link | link, go64 |
| 2017/03/03 16:05:54 | link | link, dn5b |
| 2017/03/03 17:38:14 | link | link, sa8z |
| 2017/03/07 07:43:30 | link | link, psw8 |
| 2017/03/07 09:06:04 | link | link, qmqh |
| 2017/03/07 10:19:45 | link | link, 3vx7 |
| 2017/03/07 12:40:29 | link | link, nfo7 |
| 2017/03/07 14:35:16 | link | link, 7l5c |
| 2017/03/07 16:28:26 | link | link, arjt |
| 2017/03/07 17:25:06 | link | link, 0s3o |
| 2017/03/07 18:07:21 | link | link, zfce |
| 2017/03/09 09:06:05 | link | link, 4b61 |
| 2017/03/09 10:03:57 | link | link, 7gak |
| 2017/03/09 11:25:40 | link | link, k3pe |
| 2017/03/09 15:06:14 | link | link, 8ksj |
| 2017/03/09 16:31:34 | link | link, lmj8 |

Intrinsics & Extrinsics

| Item | OneDrive | Baidu Disk (link, access code) |
|---|---|---|
| Intrinsics of cameras | link | link, fw56 |
| Extrinsics between cameras and laser | link | link, 5sb4 |

More information

I designed the robot's sensor platform, along with the firmware for data collection (sensor drivers, synchronization and storage). The robot was driven manually along the same route, over 1 km long, on our campus at different times to collect data. 21 sessions of sensor data were collected in spring as the training set, while 3 sessions in autumn and 1 in winter serve as the test set. Several research works have been conducted on this dataset, such as vision-based localization [1], laser-aided visual-inertial localization [3], laser mapping [4], global localization [5][6], and traversable area segmentation [2].

The NCLT dataset provided by the University of Michigan is conventionally considered the first dataset for this task. However, it contains no stereo vision data, which is important for visual navigation. Our dataset was collected on a custom robot with multiple sensors on the same route at different times. The robot is equipped with a on the top. An RTK-GPS is used to provide ground truth.